NVIDIA Shows Off Its Upcoming H200 GPUs For AI Computing

Published on November 17, 2023

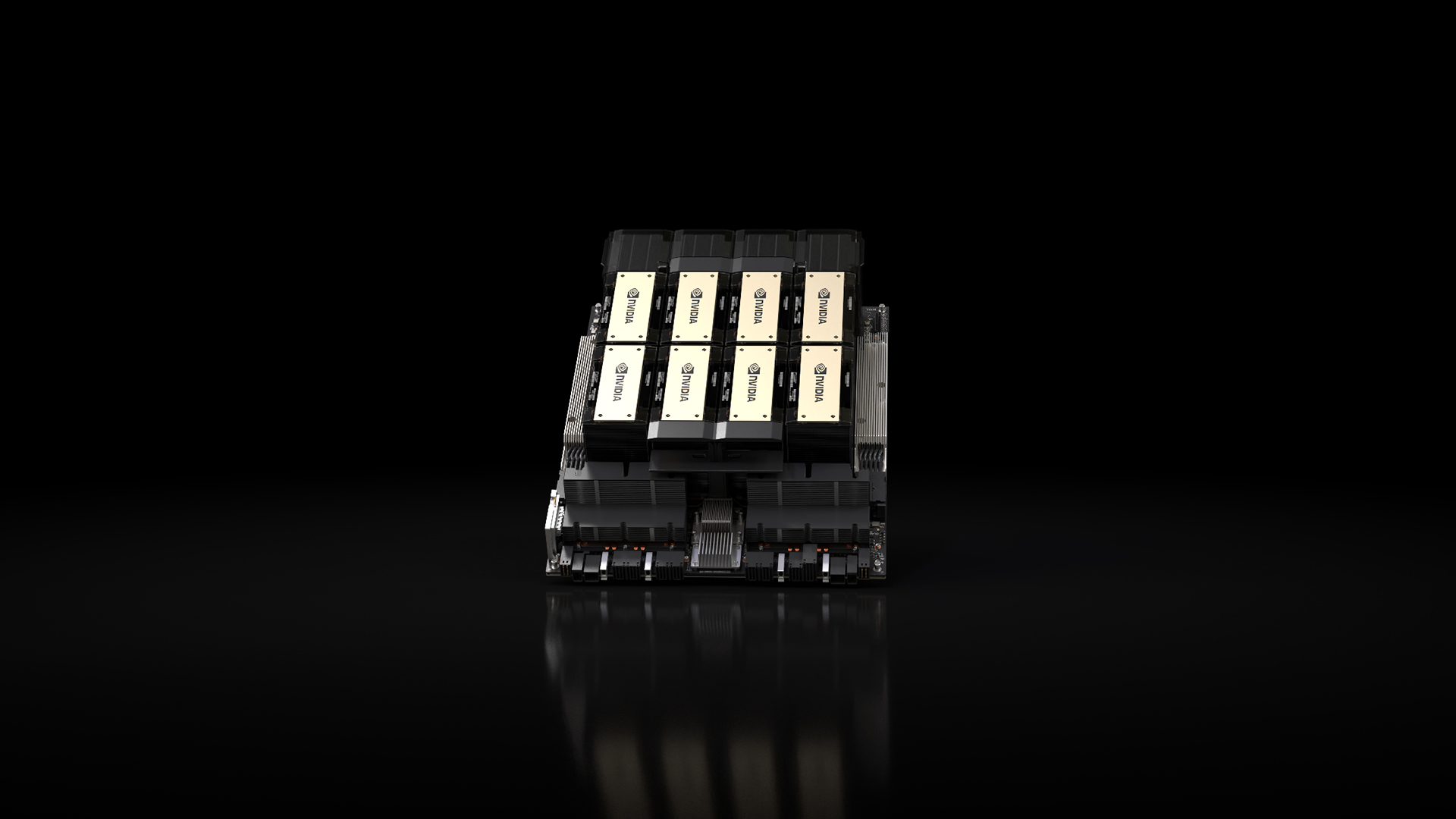

NVIDIA has announced the HGX H200 computing platform, which features the upcoming H200 Tensor Core graphics processing unit (GPU) based on its Hopper architecture. It is the first GPU to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s). That is nearly double the memory capacity of the NVIDIA H100 Tensor Core GPU, with 1.4X more memory bandwidth.
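As a quick sanity check on the "nearly double" and "1.4X" figures, the sketch below divides the H200 numbers by an H100 baseline. The baseline is an assumption not stated in the article: it uses the H100 SXM variant's published specs of 80 GB of HBM3 at 3.35 TB/s. Other H100 variants have different figures and would give different ratios.

```python
# H200 figures as stated in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8

# ASSUMPTION: H100 baseline taken from the H100 SXM variant's published specs.
# These values do not appear in the article.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBS = 3.35


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`, rounded to two decimals."""
    return round(new / old, 2)


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)

print(f"Memory capacity: {capacity_ratio}x")    # Memory capacity: 1.76x
print(f"Memory bandwidth: {bandwidth_ratio}x")  # Memory bandwidth: 1.43x
```

Under that assumption, 1.76x capacity is "nearly double" and 1.43x bandwidth rounds to the quoted 1.4X.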

The H200 Tensor Core GPU is expected to begin shipping in Q2 2024. It is a significant upgrade over its predecessors, the H100 and the A100. NVIDIA expects it to deliver up to 2X faster inference than the H100 on large language models such as Llama 2, and up to 110X faster time to results than CPUs on memory-intensive HPC applications such as simulations, scientific research, and artificial intelligence.

NVIDIA positions the H200 as a major step forward for generative AI and HPC workloads. It is expected to accelerate generative AI and LLMs while advancing scientific computing, with better energy efficiency and a lower total cost of ownership. It will be interesting to see how it is put to use once it ships.

Sources:

https://www.nvidia.com/en-us/data-center/h200/

https://nvidianews.nvidia.com/news/nvidia-supercharges-hopper-the-worlds-leading-ai-computing-platform